Part 2: Practical Applications and Strategic Implications

By Lawrence Agbemabiese, PhD

Founder, Policy & Planning Associates | AISESA AI Working Group

Recapping the Journey

In Part 1, we explored how human-AI co-intelligence produced a governance framework mapping for the AISESA AI Working Group. What began as a Friday evening experiment—“if only we could catch us a specialized app agent and coax it to do the heavy lifting”—became a demonstration of effective human-AI collaboration.

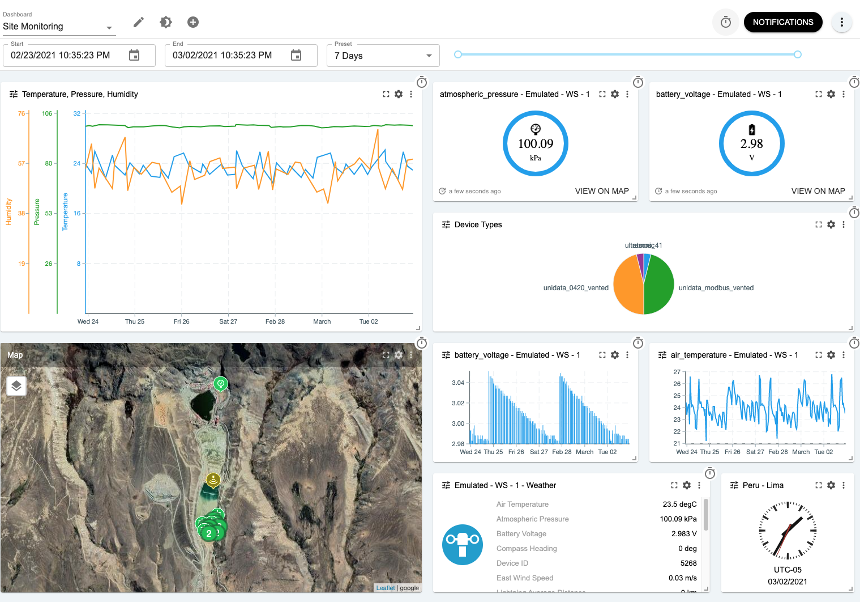

So now, let’s take a closer look at the framework itself; we shall walk through an enchanted landscape of implications for African development practice. And what have we here, this first structure? Observe!

A Visual Guide to the…

This matrix is actually more than a categorization tool; it’s a decision-making scaffold! It helps practitioners determine not just whether to use AI, but how to deploy it responsibly.

From Abstract to Applied: African Energy Systems Use Cases

Okay, let us illustrate how this framework guides real decisions in our work on African sustainable energy systems. In this context, nothing beats a good old case study. We have four of them.

Case 1: Renewable Energy Site Selection (HIGH STAKES + HARD TO VERIFY → ESCALATE)

The Task: Identifying optimal locations for solar mini-grids in rural Nigeria.

Why High Stakes?

- Millions of dollars in infrastructure investment

- Affects energy access for thousands of people

- Wrong decisions entrench energy poverty

Why Hard to Verify What the AI Produces?

- Multiple complex variables: solar irradiation, grid proximity, population density, economic activity, political stability, land ownership, environmental impact

- Data quality varies significantly across sources

- Local knowledge is critical but not always codified

TRUST Framework Application:

Admit it — before the framework, you might have succumbed to the following temptation: Just use AI to analyze satellite data, demographic information, and economic indicators, then implement its recommendations. Big mistake.

With TRUST, we recognize Case 1 belongs in “ESCALATE/ABSTAIN” space where:

- Task Stakes: Very high—infrastructure decisions shape communities for decades

- Redundancy: Require multiple AI models (e.g., compare Google’s Gemini Deep Think with Claude’s Extended Thinking Mode) PLUS domain experts PLUS community consultation

- Uncertainty Budget: Very low—we need near-certainty before committing resources

- Source Gravity: Critical—verify satellite data, cross-check with ground surveys, validate demographic data with local authorities

- Tooling & Governance: Multi-stage approval: AI analysis → Expert review → Community validation → Stakeholder sign-off → Pilot implementation before full scale

Outcome: AI accelerates the analysis (weeks instead of months), but humans make the final call based on triangulated evidence. If uncertainty remains high after all verification steps, we abstain from that location and gather more data.
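For readers who like to see a process written down as logic, the multi-stage approval chain above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the stage names come from the Tooling & Governance bullet, but the `StageResult` record, the `decide` function, the 0-to-1 uncertainty scale, and the 0.1 budget are hypothetical choices made for this sketch, not part of the TRUST framework itself.

```python
from dataclasses import dataclass
from typing import List

# Stage names taken from the Case 1 approval chain, in order.
STAGES = [
    "AI analysis",
    "Expert review",
    "Community validation",
    "Stakeholder sign-off",
    "Pilot implementation",
]


@dataclass
class StageResult:
    stage: str
    passed: bool
    # Hypothetical scale: 0.0 = fully certain, 1.0 = no confidence at all.
    remaining_uncertainty: float


def decide(results: List[StageResult], uncertainty_budget: float = 0.1) -> str:
    """Walk the stages in order; abstain on any failure or leftover uncertainty."""
    by_stage = {r.stage: r for r in results}
    for stage in STAGES:
        result = by_stage.get(stage)
        if result is None:
            return f"INCOMPLETE: waiting on {stage}"
        if not result.passed:
            return f"ABSTAIN: {stage} did not pass; gather more data"
    # All stages passed: compare what is left against the (assumed) budget.
    if by_stage[STAGES[-1]].remaining_uncertainty > uncertainty_budget:
        return "ABSTAIN: uncertainty still above budget; gather more data"
    return "PROCEED: humans make the final call on full-scale rollout"
```

Note the design choice: the function never returns an automatic "build it". Even the best outcome only hands the decision back to people, which is the whole point of the ESCALATE/ABSTAIN space.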

Case 2: Energy Policy Document Drafting (HIGH STAKES + EASY TO VERIFY → ACCELERATE THEN CERTIFY)

The Task: Drafting policy briefs on renewable energy integration for African governments.

Why High Stakes?

- Influences national policy

- Affects investor confidence. Repeat after me, “INVESTOR.CONFIDENCE.”

- Shapes regulatory frameworks

Why Easy to Verify?

- Factual claims can be checked against sources

- Policy coherence is verifiable by experts

- Outputs are documents (visible, reviewable)

TRUST Framework Application:

- Task Stakes: High—bad policy advice can derail national energy transitions

- Redundancy: Version control all drafts; maintain an audit trail of AI inputs

- Uncertainty Budget: Low—require accuracy, but acknowledge AI can draft well with human oversight

- Source Gravity: High—cite authoritative sources (IEA, IRENA, AU policy documents)

- Tooling & Governance: Use Claude for initial drafting → Expert review and fact-checking → Stakeholder consultation → Final sign-off with clear attribution

Outcome: AI as co-intelligence drafts the document in hours instead of days. Humans verify every factual claim, ensure policy coherence, adapt language for local context, and take responsibility for final content. The efficiency gain is massive, but accountability remains firmly human.
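The "audit trail of AI inputs" under Redundancy need not be elaborate. Below is a minimal sketch of one way to keep it, assuming a simple append-only log file. The function names, the record fields, and the `audit_log.jsonl` filename are all hypothetical; a working group could just as well keep the same information in a shared spreadsheet or a version-control commit message.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def audit_record(prompt: str, draft: str, model: str,
                 reviewer: Optional[str] = None) -> dict:
    """Build one audit entry: what was asked, what came back, who certified it."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,
        # Hashes let us prove later which prompt and draft were reviewed
        # without storing sensitive policy text in the log itself.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "draft_sha256": hashlib.sha256(draft.encode("utf-8")).hexdigest(),
        "reviewer": reviewer,
        # A draft counts as certified only once a named human has signed off.
        "certified": reviewer is not None,
    }


def append_to_log(record: dict, path: str = "audit_log.jsonl") -> None:
    """Append the entry as one JSON line; earlier entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
```

The `certified` flag mirrors the quadrant's name: the AI can accelerate as much as it likes, but nothing is certified until a person's name is attached.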

Case 3: Meeting Notes Translation (LOW STAKES + EASY TO VERIFY → AUTOMATE)

The Task: Translating AISESA working group meeting notes from English to French for Francophone African Brothers and Sisters.

Why Low Stakes?

- Internal document

- Errors are easily caught and corrected

- No policy or resource decisions depend directly on translation

Why Easy to Verify?

- Bilingual team members can spot-check

- Translation errors are obvious to French philosophers, I mean, speakers

- Context makes meaning clear even if translation is imperfect. Remember what Chief Sokona said in 2012, “…obsession with perfection is the enemy of the good.”

TRUST Framework Application:

- Task Stakes: Low—communication tool for informed colleagues

- Redundancy: Minimal—spot-check first paragraph and random sections

- Uncertainty Budget: High—we can tolerate minor errors

- Source Gravity: Low—source document is our own meeting notes

- Tooling & Governance: Use free AI translation (Google Translate, DeepL) → Quick spot-check → Distribute

Outcome: What used to take hours (manual translation or paying for professional services) now takes minutes. Occasional minor errors are caught by readers and corrected informally. The efficiency gain vastly outweighs the minimal risk.
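The spot-check rule from the Redundancy bullet (first paragraph plus random sections) is simple enough to write down. Here is a small sketch, assuming the translated notes have already been split into a list of paragraphs. The function name `pick_spot_checks` and its `extra` and `seed` parameters are hypothetical, introduced only for this illustration.

```python
import random
from typing import List, Optional


def pick_spot_checks(paragraphs: List[str], extra: int = 2,
                     seed: Optional[int] = None) -> List[int]:
    """Return indexes to review: always the first paragraph, plus random others."""
    if not paragraphs:
        return []
    rng = random.Random(seed)  # pass a seed to make the selection repeatable
    rest = list(range(1, len(paragraphs)))
    # Never ask for more random paragraphs than actually exist.
    sampled = rng.sample(rest, k=min(extra, len(rest)))
    return [0] + sorted(sampled)
```

A bilingual colleague then reads only the selected paragraphs against the English original. If those look fine, the notes go out.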

Case 4: Renewable Energy Project Brainstorming (LOW STAKES + HARD TO VERIFY → AUGMENT)

The Task: Generating innovative ideas for community-owned sustainable energy (a.k.a. renewable energy PLUS energy efficiency) cooperatives in East Africa.

Why Low Stakes?

- Brainstorming phase, not implementation

- Ideas will be filtered through extensive analysis before any action

- No resources committed based on initial ideas

Why Hard to Verify?

- Innovation — by definition — lacks precedent to verify against

- Feasibility depends on many context-specific factors

- AI may suggest ideas that sound good but aren’t locally appropriate

TRUST Framework Application:

- Task Stakes: Low—we’re just generating options to explore

- Redundancy: Keep human judgment engaged throughout the brainstorming

- Uncertainty Budget: High—we’re explicitly seeking wild ideas

- Source Gravity: Moderate—AI draws on global knowledge but humans filter for local relevance

- Tooling & Governance: Use ChatGPT or Gemini Voice Mode → Human evaluates each idea in real-time → Capture promising concepts for deeper analysis

Outcome: AI generates 50 ideas in 30 minutes. Humans choose the 3-5 most promising for deeper exploration, “polishing” along the way. The combination of AI divergent thinking and human convergent judgment laced with our natural creative streaks accelerates innovation.

Strategic Implications for Africa’s AI Future

These cases, by no means exhaustive, illustrate broader principles for how Africa can harness AI for sustainable development:

1. Context is King

Far be it from me to push this TRUST framework as a prescription for malaria, I mean, underdevelopment. It’s an adaptive non-linear response to the inherent complexity of the challenge facing us. What counts as “high stakes” varies by context. A policy brief for a national government is high stakes. A draft agenda for an internal working group meeting is low stakes. African practitioners must inject local context into every AI deployment decision.

2. Governance Precedes Deployment

Our AISESA AI working group developed the TRUST framework before widespread AI adoption in our projects. It is a work in progress. And we need to move fast or it will be too late: governance frameworks should guide deployment, not emerge reactively after massive problems occur.

For Africa, this means: as we build AI capacity, we must simultaneously build AI governance capacity. The AU’s emerging AI strategy should embed frameworks like AISESA’s TRUST from the outset.

3. Sovereignty Through Frameworks

When African institutions develop and apply our own governance frameworks, we assert AI sovereignty — the ability to determine how AI serves our development priorities, not merely adopting frameworks designed elsewhere.

TRUST is deliberately simple and adaptable. It doesn’t require massive AI infrastructure or deep technical expertise. It requires clear thinking about stakes, uncertainty, and accountability. This makes it accessible to resource-constrained African institutions.

4. Co-Intelligence as Accelerator, Not Replacement

The story, as narrated in Part 1, of how we built this matrix demonstrates AI’s proper role: the amplification through multiplication of human capacities. Writ large, It. Is. Not. Replacement. Our AI Working Group provided context, judgment, and constraints. Claude was then commanded to provide speed, pattern recognition, and systematic application. Together, we accomplished in 90 minutes what might have taken 8 hours alone.

For Africa, where we face urgent development challenges but limited human capacity in many domains, co-intelligence offers a path to do more with what we have without sacrificing quality or local appropriateness.

5. Open Tools, Local Adaptation

We used Ethan Mollick’s guide (developed in a Western context) but adapted it to African energy and development priorities through our TRUST lens. This model — leveraging global knowledge and filtering through local frameworks — should characterize Africa’s AI strategy.

We don’t need to reinvent every AI tool. But we do need frameworks to govern their application in our diverse living realities.

The Road Ahead: From Framework to Practice

Our AISESA AI Working Group is now moving from framework development to practical implementation. Our next steps include:

- Member Testing: Each working group member will experiment with AI tools from Mollick’s guide, document their experiences using TRUST criteria, and share case studies.

- Framework Refinement: We’ll adjust the framework based on real-world African energy use cases, asking: Are the quadrants correctly defined? Are the TRUST criteria comprehensive? What’s missing?

- Capacity Building: We’ll develop training materials to help other African energy researchers and practitioners apply TRUST in their work.

- Knowledge Sharing: We’re preparing a perspectives piece for publication in early 2026, sharing our approach with the broader African energy and development community.

- Integration with AU Strategy: We’re exploring how TRUST and similar frameworks can inform the African Union’s AI governance strategy, particularly for sustainable development applications.

A Call to Action for African AI Scholars and Practitioners

This journey from one Friday evening curiosity to a functional governance framework offers a model: Africans leading our own AI governance innovation, informed by global knowledge but rooted in local priorities.

I invite colleagues across African research, policy, and practice communities to:

- Experiment with co-intelligence approaches in your domains

- Develop governance frameworks suited to your contexts

- Share your learnings so we build collective African AI capacity

- Collaborate across borders and disciplines—the challenges are too big for any institution to solve alone

- Assert that Africa’s sustainable development agenda drives our AI strategy, not the reverse

The AU’s Agenda 2063 envisions an integrated, prosperous, peaceful Africa. AI can accelerate progress toward that vision if we deploy it wisely, governed by frameworks that reflect our values and serve our priorities.

The TRUST matrix is one small contribution to this larger agenda. The process leading up to it demonstrates one way to grow AI capacity: bringing together creative potential that is present but hidden and widely scattered. Discover what exists. Create what does not. Integrate.

Conclusion: From “Waiting for Godot” to “Building Our Future”

In an earlier email to the AISESA working group, I quoted advice I received from Dr. Youba Sokona years ago: “Don’t make perfection the enemy of the good.” My takeaway: “Act now on workable solutions to wicked problems, and don’t waste time ‘waiting for Godot’!”

This spirit characterized our Friday night AI experiment: Don’t wait for the perfect governance framework. Build a workable one. Test it. Refine it. Share it.

Africa doesn’t have the luxury of waiting until AI governance questions are perfectly resolved elsewhere. We face urgent challenges now—energy poverty, climate vulnerability, food insecurity, health crises. AI offers tools to address these challenges, but only if we govern its use wisely.

The TRUST matrix emerged from human-AI co-intelligence: my questions and judgment combined with Claude’s processing power and pattern recognition. This collaboration model—humans setting direction, AI multiplying capacity—offers a template for how Africa can harness AI for sustainable development.

I wrote to Claude Code after completing the matrix: “YES!” The co-intelligence responded: “🙌 HIGH FIVE! 🙌”

That simple exchange captures something profound: effective human-AI collaboration is celebratory, iterative, and productive. It’s not about humans versus AI or humans replaced by AI. It’s about humans and AI working together to solve problems neither could solve as well alone.

For Africa, this is the path forward: co-intelligence guided by governance frameworks rooted in our values, serving our sustainable development agenda, accelerating our progress toward AU Agenda 2063.

The future is not something we wait for. It’s something we build — with whatever tools we have, including AI, governed by frameworks we design.

Let’s build it together.

Acknowledgments:

This work emerged from discussions within the AISESA AI Working Group, including Sonja Klinsky (ASU), Youba Sokona, Yacob Mulugetta (UCL), and many other colleagues committed to advancing Africa’s sustainable energy future. Special thanks to Ethan Mollick for his comprehensive AI guide that sparked this exploration, and to Anthropic’s Claude for being an excellent co-intelligence partner.

Invitation:

If you’re working on AI governance for African development contexts, we’d love to hear from you. Let’s share frameworks, learn from each other’s experiments, and build collectively.

Contact: asasilogic@gmail.com | agbe@udel.edu | https://agenticppa.com/blog/

Appendix: TRUST Checklist for Practitioners

Before deploying AI in any African development task, ask:

- T (Task Stakes): What happens if the AI is wrong? Who is affected? What are the consequences?

- R (Redundancy): Do we have backup verification mechanisms? Can we cross-check AI outputs?

- U (Uncertainty Budget): How much error can we tolerate? What’s our acceptable confidence threshold?

- S (Source Gravity): How authoritative and verifiable do our information sources need to be? Can we verify AI’s sources?

- T (Tooling & Governance): What oversight, approval, and audit processes are needed? Who takes responsibility?

Based on your answers, place the task in the appropriate quadrant and apply the corresponding governance protocols:

- AUTOMATE: Low stakes + Easy to verify → Use AI freely with spot-checks

- AUGMENT: Low stakes + Hard to verify → Keep human in the loop at all times

- ACCELERATE THEN CERTIFY: High stakes + Easy to verify → AI accelerates, humans certify

- ESCALATE/ABSTAIN: High stakes + Hard to verify → Multi-layer verification; abstain if uncertainty remains high
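For practitioners who want to build the matrix into a form, spreadsheet macro, or internal tool, the four quadrants reduce to a two-question lookup. The sketch below is one possible encoding, not an official AISESA implementation. The `Quadrant` enum and `place_task` function are hypothetical names, and the yes/no inputs assume you have already worked through the five TRUST questions to judge stakes and verifiability.

```python
from enum import Enum


class Quadrant(Enum):
    # Each value is the governance protocol listed for that quadrant.
    AUTOMATE = "Use AI freely with spot-checks"
    AUGMENT = "Keep human in the loop at all times"
    ACCELERATE_THEN_CERTIFY = "AI accelerates, humans certify"
    ESCALATE_ABSTAIN = "Multi-layer verification; abstain if uncertainty remains high"


def place_task(high_stakes: bool, easy_to_verify: bool) -> Quadrant:
    """Map the two matrix axes to a quadrant."""
    if high_stakes:
        if easy_to_verify:
            return Quadrant.ACCELERATE_THEN_CERTIFY
        return Quadrant.ESCALATE_ABSTAIN
    if easy_to_verify:
        return Quadrant.AUTOMATE
    return Quadrant.AUGMENT


# The four case studies from this article, placed on the matrix.
cases = {
    "Renewable energy site selection": place_task(True, False),
    "Energy policy document drafting": place_task(True, True),
    "Meeting notes translation": place_task(False, True),
    "Renewable energy project brainstorming": place_task(False, False),
}
for task, quadrant in cases.items():
    print(f"{task}: {quadrant.name} ({quadrant.value})")
```

The code is deliberately trivial. The hard part, and the part that stays human, is answering the two questions honestly for your own context.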

End of Part 2

Complete TRUST Matrix documentation and discussion questions are available at: [Include link to AISESA working group resources]

Read Part 1: “From Curiosity to Framework: How Human-AI Collaboration Built a Governance Tool for Africa’s Development”